LOD2 Session

I (Pieter, Jan 29 15:10 CET) have updated the content of this wiki page with the topics we discussed during the LOD2 afternoon session at the PiLOD event at the VU in Amsterdam.

Section 2 has been updated with the names of the session participants, and section 3 with the feedback we received on our questions. Section 5 has been added with the other questions that were asked during the session.

Feel free to add or change any content on this wiki page if you feel that any relevant information is missing or wrong.

Introduction

We are currently setting up a PiLOD Data Platform for research, test and development purposes to support the themes and cases we are working on within the PiLOD context.

Additionally, we would like to offer a publishing location for linked datasets that cannot be published via other data portals, the so-called orphan linked datasets. Data owners can then publish and maintain their linked datasets themselves with the help of linked data experts.

We have investigated our preferences in tooling and formats for open, linked and big data, and we would like to work with, for example:

- Hadoop

- Hive

- MongoDB

- JSON-LD

- Virtuoso

- OntoWiki

- SMW

The following overview (in Dutch) shows clusters of functionalities, formats and tools.

Figure 1: PiLOD Data Platform Overview (source: Freshheads)

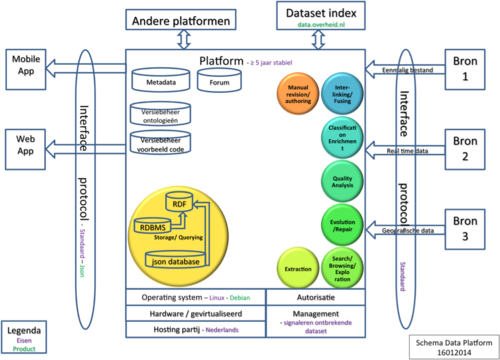

A second overview we have produced is a Front End - Back End schema (in Dutch). It offers another view on this topic, looking at the different data sources, the functionalities the platform requires to support full data cycles, and the applications developers would like to create in the Front End.

Figure 2: PiLOD Data Platform Schema (source: Gerard Persoon)

For the linked data part, our community has experience with Google Refine, D2R, TopBraid Composer, AllegroGraph, Virtuoso, Silk and other tools. We are looking for ways to broaden and deepen our “LOD literacy” with the experience available in other data communities.

An important requirement in the selection of any tooling is that the software is fully open source. It must also have an active and credible community that uses and maintains it, including in relation to other tools that could be part of a Data Platform stack.

We are now building our PiLOD Data Platform from scratch. However, we are very keen to find out how we can reuse best practices and insights from existing data platforms, software stacks and data communities to save time, energy and money.

Hence our interest in the LOD2 stack. It could give us a jumpstart for our linked data activities and tooling requirements if we can reuse the parts of the stack that fit our Platform requirements. LOD2 has a lot of usable material available.

LOD2 session participants

The following text has been updated with the information we got from the session:

The following LOD2 experts were present at the session:

- Martin Kaltenböck (Semantic Web Company)

- Peter van Kleef (OpenLink Software)

- Sebastian Hellmann (Leipzig University/AKSW)

- Dimitris Kontokostas (Leipzig University/AKSW)

And their organizations' websites:

- http://www.semantic-web.at/ (PoolParty and Unified Views)

- http://www.openlinksw.com/ (Virtuoso 7 RDF Store and Virtuoso Sponger)

- http://aksw.org (OntoWiki and other LOD2 tools)

And the following PiLOD members:

- Richard Naegelmaker

- Pieter van Everdingen

- Marcel van Mackelenberg

- Rob van Dort

- Gerard Persoon

- Christophe Guéret

- Dimitri van Hees

- John van Echtelt

- Marc van Opijnen

- John Walker

- Bert Spaan

- Thies Mesdag

- Remco de Boer

PiLOD questions

Our questions regarding LOD2 fall into the following categories:

LOD2 lessons learned?

Q: What can we learn from the LOD2 experience of setting up a stack and platform that could save us time, energy and money?

- What took more time than expected?

- What were difficult topics to investigate and to decide upon?

- What were the biggest technical challenges?

- What would you do differently now, given your experience?

A: We did talk about this as a separate topic during the afternoon session.

Raw data and Hadoop?

Q: We would like to use Hadoop for raw data. How can we make that work with the LOD2 Stack?

A: Hadoop is not part of the LOD2 stack. The components of the LOD2 stack comply with the W3C LOD standards, and Hadoop does not. However, Hadoop is used together with Virtuoso in Elsevier's LOD2 environment. You might also use the Unified Views ETL tool or a SPARQL mapper to bring Hadoop data into an LOD2 environment. A MongoDB (JSON) export to Virtuoso can also be built. See also:

- SlideShare presentation: Elsevier Life Sciences: Smart Content Drives Smart Applications
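Here is a sketch of the JSON export route mentioned above. It is a minimal illustration and not an LOD2 component. It assumes newline-delimited JSON as written by `mongoexport` (one document per line, with ObjectIds in the `{"$oid": ...}` form) and flat documents. The base URIs under `http://data.example.org/` are hypothetical. Every scalar field becomes a plain string literal, and nested values are skipped.

```python
import json
from urllib.parse import quote

# Hypothetical namespaces; replace with the URIs of your own dataset.
BASE = "http://data.example.org/"
VOCAB = BASE + "def/"


def escape_literal(value: str) -> str:
    """Escape a string for use inside an N-Triples literal."""
    return (value.replace("\\", "\\\\")
                 .replace('"', '\\"')
                 .replace("\n", "\\n")
                 .replace("\r", "\\r"))


def subject_key(raw_id) -> str:
    """mongoexport writes ObjectIds as {"$oid": "..."}; use the hex string."""
    if isinstance(raw_id, dict) and "$oid" in raw_id:
        return raw_id["$oid"]
    return str(raw_id)


def record_to_ntriples(record: dict, collection: str) -> list:
    """Turn one flat JSON document into N-Triples lines."""
    key_part = quote(subject_key(record["_id"]), safe="")
    subject = f"<{BASE}{quote(collection, safe='')}/{key_part}>"
    lines = []
    for key, value in record.items():
        # Skip the key itself, missing values and nested structures.
        if key == "_id" or value is None or isinstance(value, (dict, list)):
            continue
        predicate = f"<{VOCAB}{quote(key, safe='')}>"
        lines.append(f'{subject} {predicate} "{escape_literal(str(value))}" .')
    return lines


def jsonl_to_ntriples(jsonl: str, collection: str) -> str:
    """Convert newline-delimited JSON (one document per line) to N-Triples."""
    triples = []
    for line in jsonl.splitlines():
        if line.strip():
            triples.extend(record_to_ntriples(json.loads(line), collection))
    return "\n".join(triples) + "\n"
```

The output is an N-Triples file, a format Virtuoso's RDF bulk loader can ingest. A real pipeline would map fields to an agreed vocabulary and use typed literals rather than the generated `def/` properties used here.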

Navigation layer for triple stores?

Q: We would like to make the data in our triple stores more accessible via domain-specific APIs, so that we can use parameterized SPARQL queries in the front end. For instance, for the Base Registration of Addresses and Buildings (the BAG registry), we would like to have pre-formatted SPARQL queries for Domicile (Dutch: woonplaats), Residency (Dutch: verblijfplaats) and Premises (Dutch: pand). Does LOD2 have any experience with scenarios like these? And do you know of any best practices we could look at to investigate this topic in more detail?

A: LOD2 does not contain such constructs, but building such APIs on top of a triple store like Virtuoso could become a best practice. Also use context-sensitive mappings, so that the front end only shows the details relevant to your target audience. The LOD2 Stack also does not contain the Linked Data API (LDA) or its ELDA implementation, but it would be good to look at these kinds of solutions in more detail. See also:

- http://code.google.com/p/linked-data-api/

- http://code.google.com/p/puelia-php/ (PHP implementation of LDA)

- http://code.google.com/p/elda/ (Java implementation of LDA)

- LinkedIn discussion: Much to do about JSON(-LD) (in Dutch/English)

Search and Elasticsearch?

Q: We would like to use Elasticsearch for searching all kinds of documents. How can we make that work with the LOD2 Stack? We would also like to offer faceted search.

A: Search will be further improved within the LOD2 Stack with a Solr-based solution. You can also look at SIREn.
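Faceted search means returning, next to the hits, the number of hits per value of selected fields. Users can then narrow a search step by step. The toy in-memory sketch below only illustrates that idea. Solr (facet fields) and Elasticsearch (terms aggregations) compute the same counts on their indexes. The document fields `title`, `type` and `city` are hypothetical.

```python
from collections import Counter


def faceted_search(docs, text="", filters=None, facet_fields=()):
    """Return the matching documents plus value counts per facet field.

    Toy in-memory version of what Solr facet fields or Elasticsearch
    terms aggregations compute over an index.
    """
    filters = filters or {}
    hits = [
        doc for doc in docs
        if text.lower() in doc.get("title", "").lower()
        and all(doc.get(field) == value for field, value in filters.items())
    ]
    facets = {field: Counter(doc[field] for doc in hits if field in doc)
              for field in facet_fields}
    return hits, facets


# Hypothetical example documents.
DOCS = [
    {"title": "Energy labels Amsterdam", "type": "dataset", "city": "Amsterdam"},
    {"title": "BAG extract Amsterdam", "type": "registry", "city": "Amsterdam"},
    {"title": "Energy labels Delft", "type": "dataset", "city": "Delft"},
]
```

Searching `DOCS` for "energy" with facets on `city` and `type` gives two hits. The `city` facet shows one hit each for Amsterdam and Delft, and the `type` facet shows two for `dataset`. Passing `filters={"city": "Amsterdam"}` in the next call narrows the result to the Amsterdam hit.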

RDF endpoints discovery?

Q: There is no index of RDF endpoints. Take an app that uses multiple data sources, for instance a tourism app for the city of Rotterdam. How can the app know which RDF endpoints to access? What are the LOD2 experiences with this topic, and do you know of any best practices?

A: We did not discuss this topic during the session.
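In the absence of such an index, one option is a catalogue of dataset descriptions in the spirit of the W3C VoID vocabulary (which includes `void:sparqlEndpoint`). The app would consult this catalogue to pick its endpoints. The sketch below is a hypothetical, in-memory version of such a catalogue. The dataset names, endpoint URLs, topics and regions are invented for illustration, and regions are matched literally without any geographic hierarchy.

```python
from dataclasses import dataclass


@dataclass
class EndpointDescription:
    """Minimal description of a dataset and its SPARQL endpoint (VoID-inspired)."""
    dataset: str
    sparql_endpoint: str
    topics: set
    regions: set


def select_endpoints(index, topics, region):
    """Endpoints whose dataset covers the region and at least one requested topic."""
    wanted = set(topics)
    return [d.sparql_endpoint for d in index
            if region in d.regions and d.topics & wanted]


# Hypothetical catalogue entries for a Rotterdam tourism app.
INDEX = [
    EndpointDescription("events", "http://events.example.org/sparql",
                        {"events", "culture"}, {"Rotterdam", "Amsterdam"}),
    EndpointDescription("hotels", "http://hotels.example.org/sparql",
                        {"accommodation"}, {"Rotterdam"}),
    EndpointDescription("addresses", "http://addresses.example.org/sparql",
                        {"addresses", "buildings"}, {"Netherlands"}),
]
```

The `addresses` entry is never returned for Rotterdam, because regions are not organized hierarchically here. A real index would need such a hierarchy, plus a way to keep the descriptions up to date.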

Stack [X] – Stack [Y] alignment?

Q: How can you make two stacks work properly with each other, for instance the LOD2 Stack with the PiLOD Data Platform, which uses additional tooling? Would you need an index on top of the two stacks? And what else? From a developer's point of view, we would also like to develop read-write interfaces using JSON-LD, possibly with tools like Apache Marmotta and/or Callimachus (these tools are on our list for further investigation). We are also looking at the W3C LOD best practices and the activities of the Linked Data Platform (LDP) Working Group. LOD2 uses a data cycle as the anchoring mechanism to position the LOD2 software components in a logical structure. But what if you take an application lifecycle, with its related tools, as the starting point? Could we then make (or reuse, when available) an overview of how the LOD publishing, finding and consuming parts would fit together in a new structure?

A: LOD2 is an open stack with mostly open source components that can be integrated with other tools.
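As an illustration of the JSON-LD read-write interfaces mentioned in the question, the sketch below prepares, but does not send, an HTTP POST with a JSON-LD body that would create a resource in an LDP container. The container URL, the use of schema.org terms and the `Slug` value are assumptions for illustration. Check against their documentation whether Marmotta, Callimachus or another LDP server accepts exactly this request.

```python
import json
from urllib.request import Request

# Hypothetical LDP container; schema.org terms are used only as an example.
CONTAINER = "http://platform.example.org/ldp/buildings/"
CONTEXT = {
    "schema": "http://schema.org/",
    "name": "schema:name",
    "address": "schema:address",
}


def to_jsonld(properties: dict) -> dict:
    """Wrap plain properties in a JSON-LD document.

    The empty "@id" is a null relative IRI; LDP 1.0 lets a client use it
    to refer to the resource that the POST will create.
    """
    return {"@context": CONTEXT, "@id": "", **properties}


def create_request(slug: str, properties: dict) -> Request:
    """Prepare, but do not send, a POST asking the container to create a resource."""
    body = json.dumps(to_jsonld(properties)).encode("utf-8")
    return Request(
        CONTAINER,
        data=body,
        method="POST",
        headers={"Content-Type": "application/ld+json", "Slug": slug},
    )
```

Passing the prepared request to `urllib.request.urlopen` would actually send it. The `Slug` header is only a hint for the new resource's name, and the server decides the final URI.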

Administrator topics

Note: The administrator topics were not discussed during the session.

What data?

No base registrations: we are not the official publication portal for base registrations, although we might use copies or subsets of base registrations for development and testing purposes. We might give owners of orphan linked datasets a ‘home’ to publish their datasets in our environment (e.g. energy labels that are available as linked data from the VU, but that should be maintained by their owners).

What tooling?

Do we stick to the list of tools we determined during our PiLOD Data Platform session in Tilburg? Or would we like to extend this list with tooling from the LOD2 Stack if it fits our requirements?

What security?

What login policy? Login and/or 2-factor authentication? What user/administrator credentials? What are the LOD2 experiences with security? Etc.

What administrator tasks?

Who are allowed to install software packages? What are our policies regarding compiling software? Which administrators need root access? Where do we locate documentation on the platform?

What administrator team?

What kind of administrators do we need for PiLOD? What is the minimum number of administrators we need? What are the LOD2 experiences on this topic? Which PiLOD participants want to do administrator tasks?

What administrator interface?

GUI and/or Unix prompt? What do LOD2 Stack administrators use for LOD2 Stack implementations, e.g. in Austria?

LOD2 sandbox environment?

Q: To get better acquainted with the LOD2 Stack, we would like to use a sandbox environment. That would let us make well-founded decisions on which tooling fits our requirements and which LOD2 packages we would then need.

A: We did not discuss this topic during the session.

Prioritization of questions

We have prioritized the LOD2 questions, given the available time during the afternoon session, as follows:

- How to deal with tooling outside the LOD2 Stack, e.g. Hadoop: how could Hadoop interact with components of the LOD2 Stack when creating RDF from Hadoop data (paragraphs 3.2 and 3.6)

- Navigation layer for triple stores (paragraph 3.3)

- Search (paragraph 3.4)

- RDF endpoints discovery (paragraph 3.5)

- Lessons learned (paragraph 3.1)

The administrator questions can be limited to best practices on how to set up platform administration so that it is as efficient and as little time-consuming as possible.

Additional questions and remarks

During the afternoon session several questions were asked that we had not prepared.

URI Explosion when converting relational databases to RDF?

Q: When we convert normalized data in relational databases to RDF, we get an explosion of URIs that we don't want. One object gets many URIs, given the details we would like to know about it. These include the changes that have happened over time (from-to dates/time stamping). What best practices would you recommend?

A: There is no single answer to that, but not every record field in a database should get a URI. In general it is good practice to break open structures and link them to make them more accessible. In any case, create a machine-readable version of your database (documentation in RDF). This is a subject for further investigation. See also:

- PiLOD Wiki page: From Relational Database to Linked Data (in Dutch).
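The advice not to give every field its own URI can be sketched as a row-to-triples mapping. It is loosely in the spirit of the W3C Direct Mapping of relational data to RDF, but not a compliant implementation of it. Only the row's entity gets a URI derived from its primary key. Ordinary columns, including from/to validity dates, become literals on that entity, and foreign keys become links to the URI of the referenced row. The base URI, table and column names are hypothetical.

```python
# Hypothetical base URI for the converted database.
BASE = "http://data.example.org/"


def literal(value) -> str:
    """Quote a column value as an N-Triples-style literal."""
    text = str(value).replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
    return f'"{text}"'


def row_to_triples(table: str, row: dict, key: str, foreign_keys: dict) -> list:
    """Map one relational row to (subject, predicate, object) triples.

    Only the row itself gets a URI, built from its primary key. Ordinary
    columns, including from/to validity dates, become literals on that
    URI; foreign-key columns become links to the referenced row's URI.
    """
    subject = f"<{BASE}{table}/{row[key]}>"
    triples = []
    for column, value in row.items():
        if column == key or value is None:
            continue  # the key is already in the URI; NULLs produce no triple
        predicate = f"<{BASE}def/{table}#{column}>"
        if column in foreign_keys:
            obj = f"<{BASE}{foreign_keys[column]}/{value}>"
        else:
            obj = literal(value)
        triples.append((subject, predicate, obj))
    return triples
```

Consider a row such as `{"id": 42, "name": "Jansen", "address_id": 7, "valid_from": "2010-01-01", "valid_to": None}` with `address_id` as a foreign key. It yields three triples on a single person URI rather than separate URIs for each detail. How best to model the full history of changes remains the open point mentioned in the answer.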

From data silos to referenceable URIs

The text in this section also refers to some of the remarks that Phil Archer made during his presentation.

Q: Does all data need to become linked data?

A: No, not all data needs to become linked data. Use linked data when the complexity you are dealing with calls for it. In some cases we are simply trying to solve a problem for which linked data is the only possible solution, and then there is no need to discuss business cases. See also:

- W3C presentation: LOD in Context (as presented in Amsterdam at the VU during the 2nd PiLOD event)

RDF triple store scalability?

Q: We expect to have some very large triple stores for some of the cases we are working on. What about the scalability of the LOD2 Stack components?

A: The Virtuoso 7 triple store is built for scalability. It supports clustered deployment and uses various optimization techniques. See also:

- PDF-document: Open PHACTS: How Does It Work? (a large Linked Data application based upon Virtuoso, LDA and other components)

Data quality?

Q: Does the LOD2 stack offer any data quality services?

A: No. It is good practice to improve the quality of data via crowdsourcing (a feedback loop). For example, users of Point of Interest (POI) information in Austria corrected entries with wrong height information. Let people query your data to find anomalies, e.g. in DBpedia articles. If they find any, let them report them to you, and encourage them to look for more anomalies in related articles that might interest them.

DBpedia has created many sample queries from which people can easily start building their own SPARQL queries.
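The sketch below shows a check users could run to spot anomalies like the wrong heights mentioned above. The POI records, the `height` field and the plausible range passed in are assumptions. In practice the same condition could be expressed as a SPARQL `FILTER` against the published data, and the results fed back to the data owner.

```python
def find_height_anomalies(pois, min_m, max_m):
    """Return (id, height, reason) for POIs with a missing or implausible height.

    min_m and max_m are the plausible elevation bounds in metres for the
    area the data covers; choosing them is up to the data owner.
    """
    anomalies = []
    for poi in pois:
        height = poi.get("height")
        if height is None:
            anomalies.append((poi["id"], None, "missing"))
        elif not min_m <= height <= max_m:
            anomalies.append((poi["id"], height, "out of range"))
    return anomalies
```

Each tuple in the returned list is a candidate report for the data owner. A human still decides whether the value is actually wrong.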

Link extraction tools?

Q: Does the LOD2 Stack offer any link extraction tools, like the ones we use for our legislation data to find references to articles?

A: No, that is not part of the LOD2 Stack. However, PiLOD can contact John Sheridan in the UK, who is working on a similar project within the EU context.

- http://www.johnlsheridan.com/ (personal website)

- http://2013.data-forum.eu/person/john-sheridan
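For the legislation case, a very simple link extractor can be sketched with a regular expression. It finds references such as "artikel 12" or "art. 3:40" and turns them into links. The pattern only covers these forms, and the law URI is a hypothetical placeholder. Real Dutch legal citations (paragraphs, sub-clauses, references to other laws) need a far richer grammar.

```python
import re

# Simplified, hypothetical pattern for Dutch article references such as
# "artikel 12", "art. 3:40" or "Artikel 7a".
ARTICLE_REF = re.compile(
    r"\b(?:artikel|art\.)\s+(\d+[a-z]?(?::\d+[a-z]?)?)", re.IGNORECASE)


def extract_article_refs(text: str) -> list:
    """Return referenced article numbers in order of first appearance."""
    refs = []
    for match in ARTICLE_REF.finditer(text):
        ref = match.group(1)
        if ref not in refs:
            refs.append(ref)
    return refs


def to_links(refs, law_uri: str) -> list:
    """Turn article numbers into link targets under a (hypothetical) law URI."""
    return [f"{law_uri}/artikel/{ref}" for ref in refs]
```

For the text "Zie artikel 12 en art. 3:40; ook Artikel 7a en opnieuw artikel 12.", `extract_article_refs` returns `["12", "3:40", "7a"]`. The repeated reference to article 12 is kept only once.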

Linked data applications?

Q: Are there any applications built with the LOD2 Stack similar to the "House Safe" (Dutch: Huiskluis)?

A: No, the cadastral data is not open in Austria. In general, though, Vienna is the city to watch when it comes to linked open data. It is the most active city in Austria in publishing datasets and developing applications. See the Austrian Open Government Data portal for more details.

LOD2 delivers horizontal technology that can be used for a large number of vertical cases.

LOD2 Stack support after June 2014?

Q: What will happen after June 2014 when the LOD2 program ends?

A: The EU LOD2 program is working with several partners to keep the LOD2 community alive and to ensure continuity of support for the LOD2 Stack after the program ends in June 2014. In any case, the LOD2 consortium partners will provide support for their specific components.

The LOD2 Stack becomes the Linked Data Stack in 2014. See also:

- Linked Data Stack (Home page)

LOD2 mailing list and LOD2 questions

Q: Where can we submit questions?

A: You can submit questions to the LOD2 team via the LOD2 site, e.g. about new tools you would like to add to LOD2. For specific support questions, you can contact your contacts at one of the LOD2 consortium partners directly.

LOD2 PUBLINK consultancy offer

Q: What support is available on the LOD2 Stack?

A: PUBLINK is a free LOD2 consultancy service, backed by the LOD2 consortium partners, that helps organizations start working with the LOD2 Stack.

It would be very helpful for us to use this offer to bring our platform to the next level of maturity. That includes tools like Virtuoso 7 and OntoWiki, and the LOD2 community's experience with Hadoop in relation to the Elsevier LOD2 activities.

References

LOD2 references

- http://lod2.eu (Home page)

- http://stack.lod2.eu/blog/ (LOD2 Stack overview)

- http://wiki.lod2.eu/display/LOD2DOC/16+-+3rd+release+of+the+LOD2+Stack (LOD2 Wiki)

- http://lod2.eu/BlogPost/webinar-series (overview of available webinars and presentations)

- http://demo.lod2.eu/lod2demo (LOD2 Demo environment)

Other references

- http://www.mongodb.org/

- http://hadoop.apache.org/

- http://www.elasticsearch.org/

- http://www.w3.org/TR/ldp/ (Linked Data Platform 1.0)

- http://www.w3.org/2012/ldp/wiki/Main_Page (LDP Working Group)

- http://www.w3.org/wiki/LDP_Implementations (LDP Implementations)

- http://callimachusproject.org/

- http://marmotta.apache.org/

These tools are prominent in our discussions when we talk about our PiLOD Data Platform and when we talk about development activities.