Boek/Hermans

< BoekHow to use LODRefine?

Auteur: Paul Hermans (ProXML)

Context

[bewerken]

Most of the data are not yet available as triples. They exist in tables, spreadsheets, ...; they are serialised as XML or JSON. The question arises how these can be transformed to RDF triples in an efficient way. A possible tool that can be of help is LODRefine, which is a specialised version of the, initially developed by Google, 'messy' data cleaning tool 'OpenRefine'.

OpenRefine

[bewerken]

OpenRefine (ex-Google Refine) is a powerful tool for working with messy data, cleaning it, transforming it from one format into another, extending it with web services, and linking it to databases like Freebase. The main functionalities of OpenRefine are:

- explore the contents of the dataset

- clean-up the dataset

- transform the dataset into other formats

- reconcile your data with external datasets using webservices

For more information on the basic non linked open data relatedfunctionalities, I refer to following documentation:

- http://openrefine.org/

- the Refine tutorial: http://bit.ly/15cOTmM

- http://bit.ly/18BA9zP

OpenRefine is an open system that allows to develop plugins to add additional functionalities. You will find the list of extensions here: https://github.com/OpenRefine/OpenRefine/wiki/Extensions.

One notices that some of these extensions are made specifically for bringing data into the linked open data web of for reconciling data with data already published on the web.

LODRefine

[bewerken]

Comes in LODRefine, which is a packaged version of OpenRefine that bundles these linked open data related extensions. We advise you to use this prepackaged version instead of assembling your own. You find LODRefine at http://code.zemanta.com/sparkica/.

Some tutorial material can be found at:

How to use LODRefine

[bewerken]

Input

[bewerken]

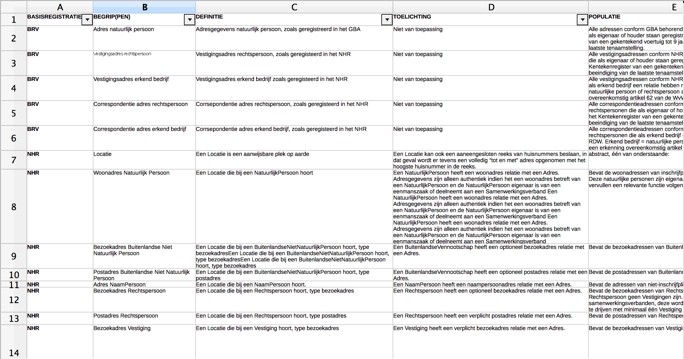

The datafile used in this procedure is a spreadsheet file.

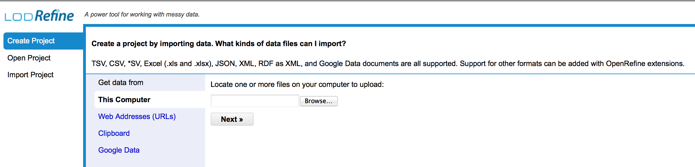

Opening LODRefine

[bewerken]

Browse to the spreadsheet file and click ‘Next’.

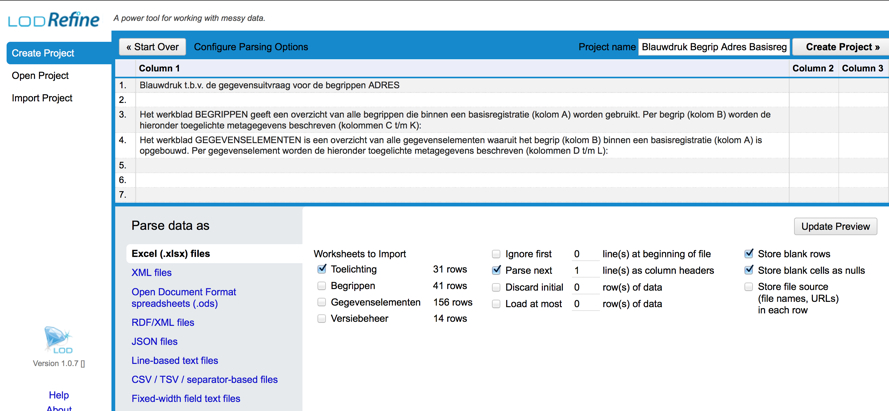

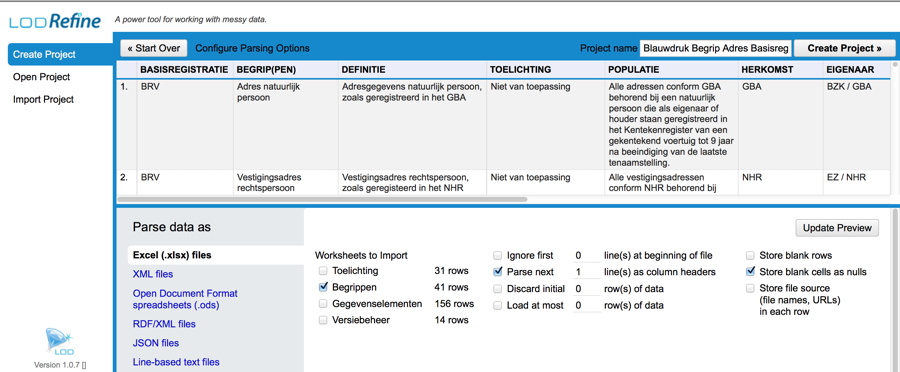

OpenRefine dedected that it is an excel file. It opened the first worksheet. It will consider the first line as a header row and has some defaults for threating blanks. For this exercise we will use the second worksheet ('Begrippen') and skip blank rows.

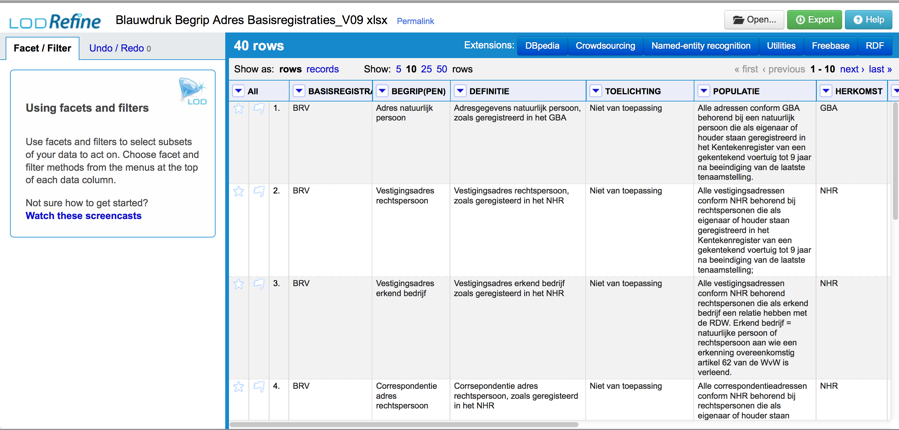

Click ‘create project’ which gives us a view on 40 rows.

Investigate and clean-up your data

[bewerken]

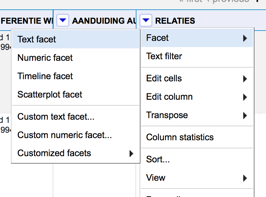

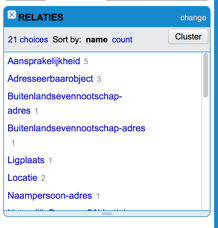

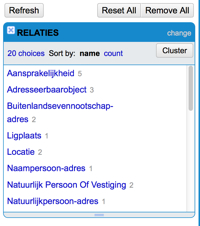

There is a column with name ‘relations’. I want to generate a list of the values used. This can be done by choosing in the dropdown to the left of the column name (Facet → Text facet).

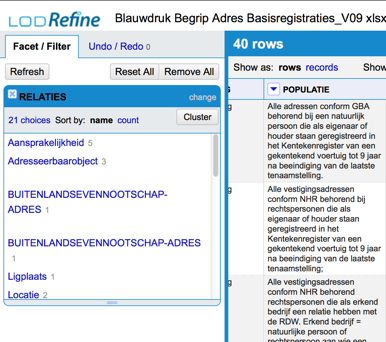

Then I get following facet widget.

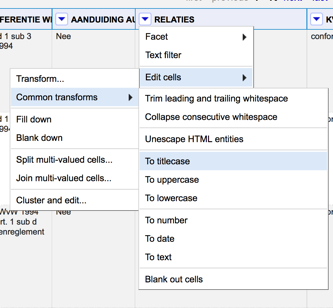

Someone has been using all caps, let's uniformize this. OpenRefine comes with lots of prebuilt functions for modifying and transforming content. For this case I can use the 'To titlecase' function.

Result:

The next point that gets our attention is the strange double of ‘'Buitenlandsevenootschap-adres’. By clicking the edit button next to the string we get a closer look on what the value exactly is.

We see that the second value has a trailing space. Remove it and confirm (Apply) and you get.

These are just a few possibilities to discover and cure the 'messiness' of your data. For more info on this we refer to the regular OpenRefine documentation, tutorials and video's.

Export2RDF

[bewerken]

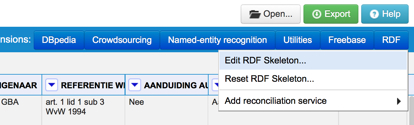

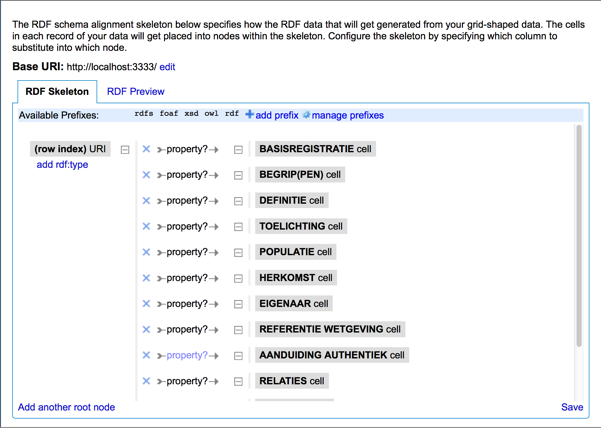

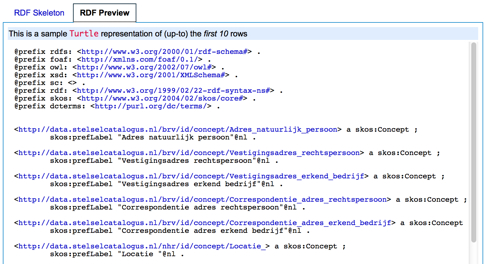

The functionality which is of most interest to us is the ability to map the table we have now to RDF. For this we need to establish a mapping between the cells of our table to corresponding triples. For this we use a RDF skeleton.

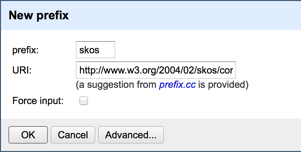

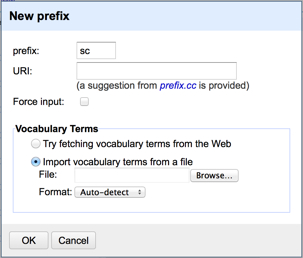

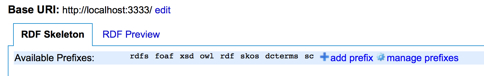

The initial action we should take is adding vocabularies we are going to need and establish prefixes for them. You see that rdf, rdfs, owl and foaf are preloaded. My habit is to always add dcterms and skos. In addition to these publicly available vocabularies we add also a for this project custom made one, which we have on file.

Next step is to assign a unique identifier for every thing e.g. Begrip the table describes. We follow here following URL pattern: http://data.stelselcatalogus.nl/{basisregistratie}/id/concept/{id}.

Since we don't have a real unique identifier in the source, we will build a the {id} part based on field ‘Naam’. To implement this pattern we can rely on the built-in GREL language (Google Refine Expression Language) of LODRefine (http://bit.ly/13O09Ig). Click URI, then you get the RDF Node configuration pane and go direclty to edit/preview.

A little bit of explanation. We concatenate using + 4 pieces of which 2 are fixed strings, hence surrounded with double quotes.

"http://data.stelselcatalogus.nl/" + {basisregistratie} + "/id/concept/" + {naam}

We can fill in the variable {basisregistratie} with the information contained in column 'BASISREGISTRATIE'. The {naam} we find in column 'BEGRIP(PEN). The GREL function to grab cell content is cells['{columnname}'].value

"http://data.stelselcatalogus.nl/" + cells['BASISREGISTRATIE'].value + "/id/concept/" + cells['BEGRIP(PEN)'].value

To finalize we need to lowercase the values of basisregistraties and need to replace the spaces en some other characters in the name, giving us

"http://data.stelselcatalogus.nl/" + toLowercase(cells['BASISREGISTRATIE'].value) + "/id/concept/" + replaceChars(cells['BEGRIP(PEN)'].value,' ()','_')

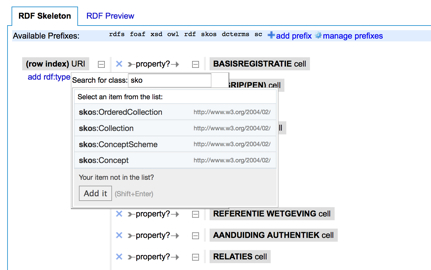

Now that we have established our identifiers, the next step is to indicate what we are talking about. Every item in this spreadsheet is an instance of a skos:Concept. Hence we click add rdf:type in the RDF skeleton and choose:skos:Concept.

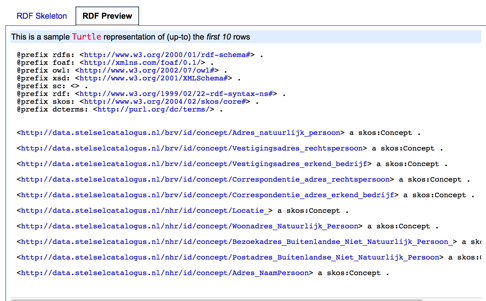

Next check what we have done by going to the RDF Preview.

Our first triples :-).

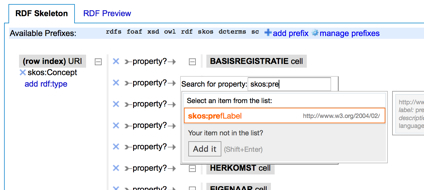

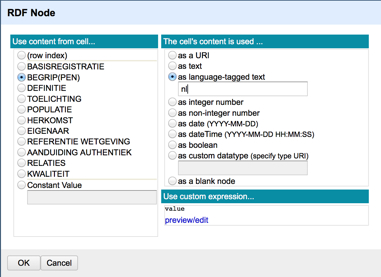

Leaves us to map the existing columns on properties. Just one example, we decide to map the column 'BEGRIP(PEN)' to the property skos:prefLabel.

With following result:

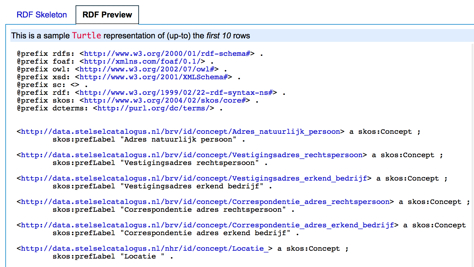

A final adjustment we can make is to indicate the datatype or language of the value of skos:prefLabel.

With result:

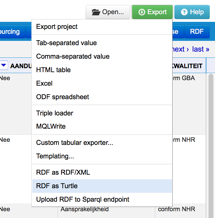

So this should be enough introduction to get you going on your own. Once all wihed mappings are done, the final step is to export to RDF.

Reconciling with other datasets

[bewerken]

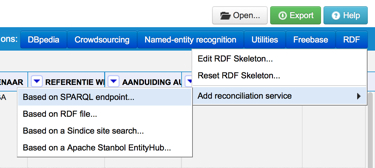

The big idea behind linked data is that we reach out to other already published datasets. We can do so by replacing string values in our own dataset with unique identifiers existing elsewhere. In our example it would be good to replace the string name of the basisregistrations with an already existing identifier as available at a sparql endpoint.

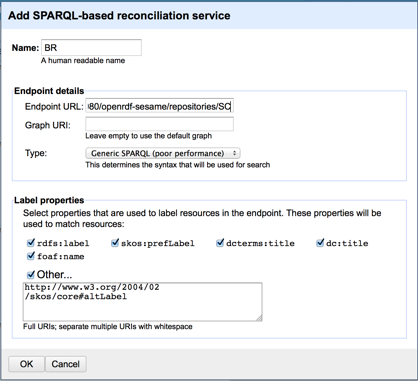

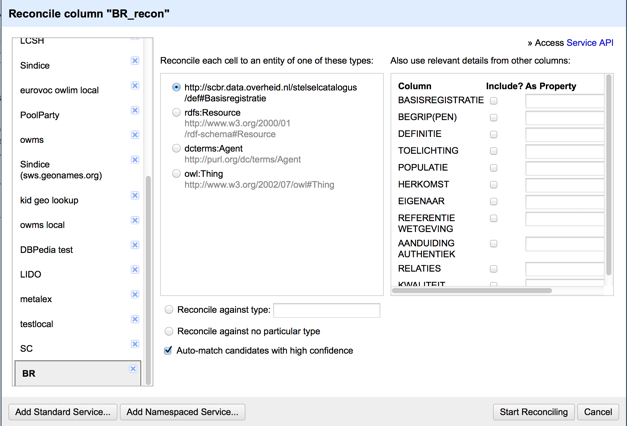

We use for this the built-in reconciliation service.

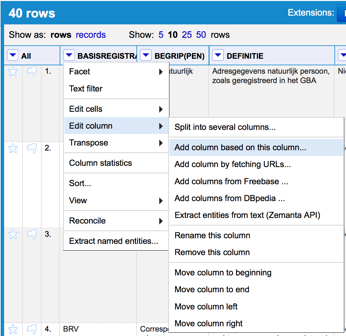

We indicate the address of the SPARQL endpoint we want to use and all the labels that can be used to match the strings we have in the column of our source. In this case we added skos:altLabel. Now that we have defined our reconciliation service, we move back to our table. First we add an additional column based on the existing 'BASISREGISTRATIE' one.

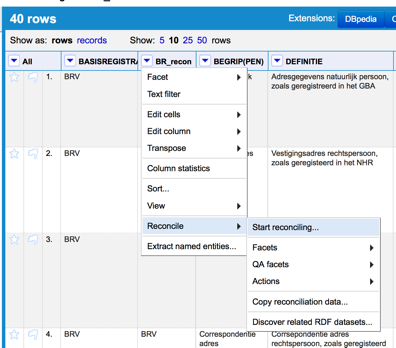

And give the new column a name; we used 'BR_recon'. Then we start the reconciliation using our previously defined reconciliation service with name 'BR'.

LODRefine proposes some classes that fit best your needs. Indicate the one that is most specific. Click 'start reconciling' and the quality of the matching result is indicated in the column header. In our case a 100% match.

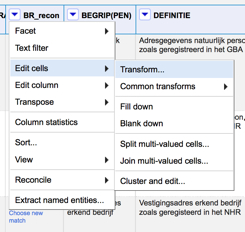

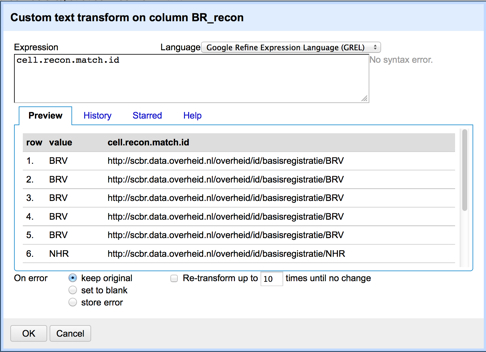

The only thing left is to grab the identifiers. This is done by a transform using following GREL statement: cell.recon.match.id

Conclusion

[bewerken]

LODRefine should be part of any Linked Open Data toolchain. Next to all the cleaning goodness it allows you to map lots of legacy formats (csv, excel, json, xml, …) to be transformed into RDF. Furthermore it allows you to replace string values with identifiers already used in the Linked Open Data web, so your triples get linked into this cloud also.